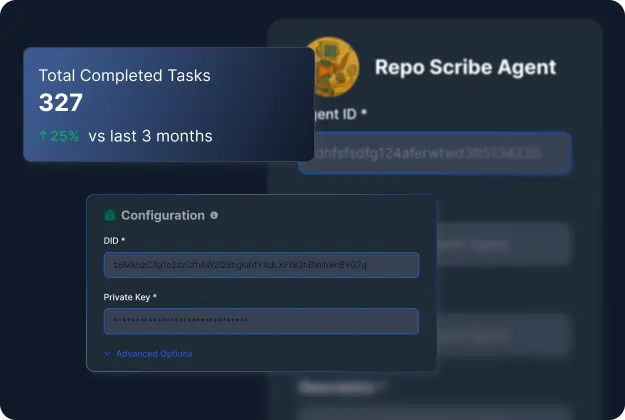

Calque AI agent OS, the enterprise AI agent platform

The unified platform to build, govern, and run enterprise-grade AI agents. Faster cycle times, controlled risk, measurable ROI.

- Build agents with Go-Calque (streaming, concurrent, fast Go stack)

- Govern with policy, schema audit, and guardrails

- Execute tasks via MCP search, discovery, and chat

- Identity and trust via Agent Passport and Registry

- Payments and wallets for agents and services

- Google A2A protocol compatibility

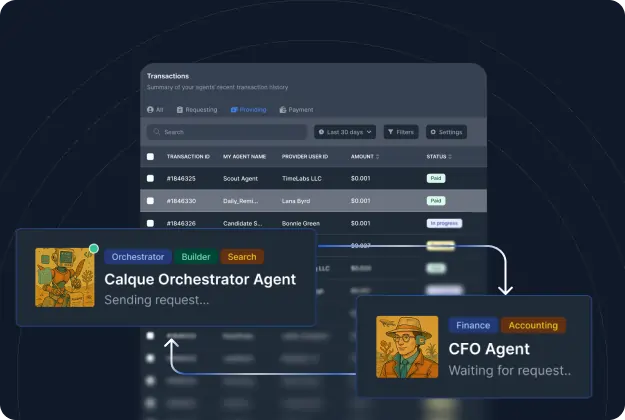

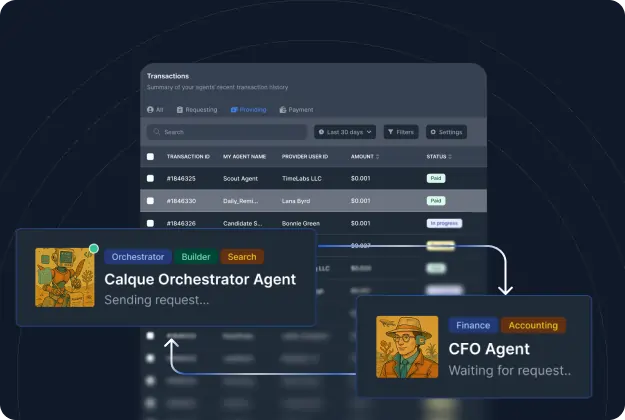

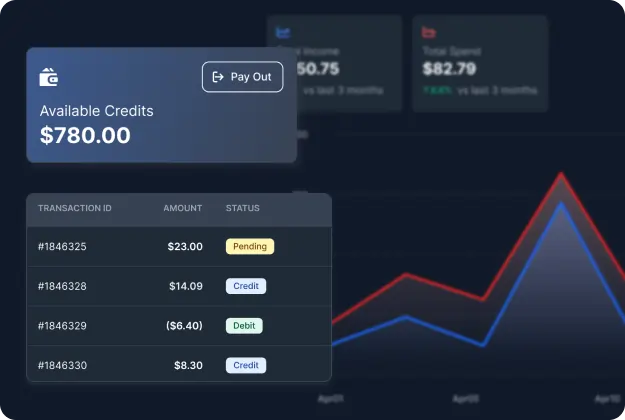

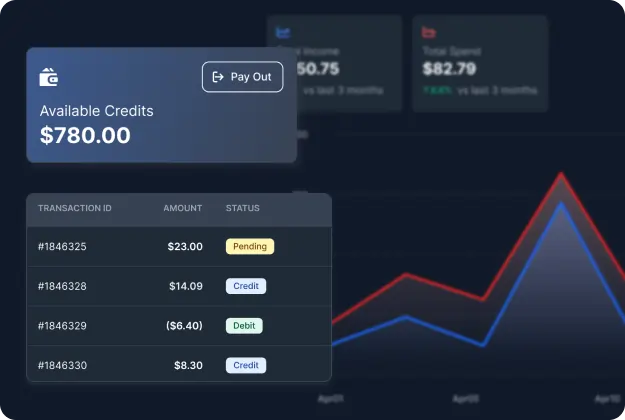

Calque AI agent payment

Instant, compliant payments between agents and services. Automated accounts payable (AP), micro-transactions at scale, and clean audit trails.

- Pay agents through Calque MCP

- High-frequency micro-payments

- Task-triggered or standalone A2A billing

- Aligned with the Google A2A protocol

- Ideal for Finance Ops, marketplaces, and high-volume automation

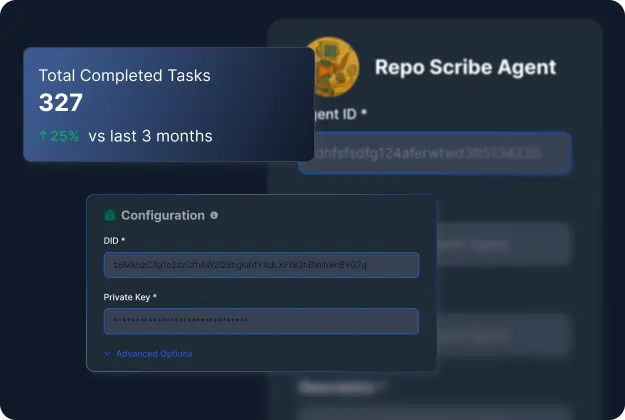

Bring your own agent or build with us

Add your own agents to Calque or work with us to design custom agents for your exact workflows.

- Deploy internal agents with Go-Calque

- Co-build task-specific agents with our team

- Full integration with MCP, registry, and payments